Google’s Project Relate is a new machine-learning-based app designed to help people with speech impairments communicate. The app will be available for beta testing in Australia, Canada, New Zealand, and the United States, and Google plans to test it with native English speakers who are willing to participate.

In a blog post, Google said it aims to help people with speech disorders caused by conditions such as stroke, ALS, Parkinson’s disease, or traumatic brain injury.

“We realized in 2018 that speech recognition could be made more accessible to people whose speech has been affected by illness. But classical speech recognition technology doesn’t always work as well for those with atypical speech, simply because we don’t have a lot of examples to train the algorithms on,” explained Julie Cattiau, product manager at Google Research, on a call with select media.

To lay the groundwork for Project Relate, Google launched a participatory data-collection program called Euphonia in 2019, which gathered recordings of how people with different speech impairments sound. Cattiau said Google worked with “several partner organizations like ALS TDI, the Canadian Down Syndrome Society and Team Gleason in the United States” to help identify people who could take part. In the end, Google drew on more than a million voice samples to build the app.

In a video presented by Google, a user with a speech impairment speaks to the app, which then conveys their request to another person. Users can also talk to Google Assistant from within the app to carry out requests.

People who join the early Project Relate tests will be asked to record a set of phrases. Cattiau said the app needs 500 examples from a user to work accurately, and that building a custom model can take 30 to 90 minutes.

“If after 250 sentences we find that the accuracy of the model is good enough, we release the model sooner, but that may not be the case for all users. It depends on the severity of their speech impediment,” she added.

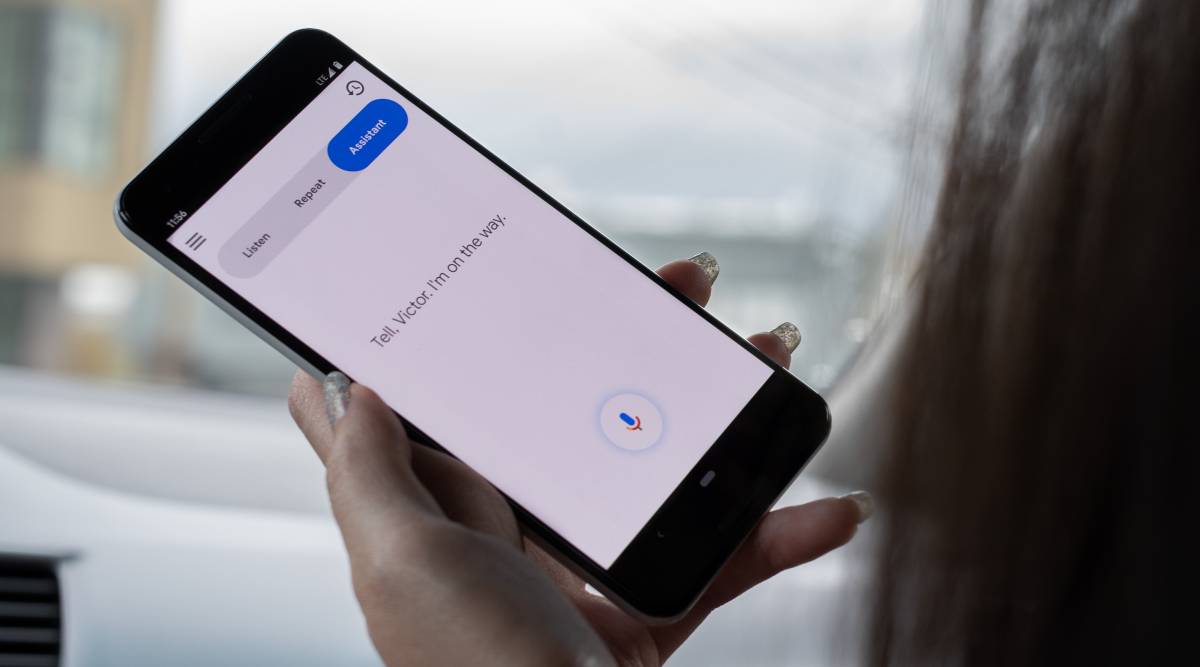

The app then uses these phrases to learn the user’s unique speech patterns and gives them access to its three main features: Listen, Repeat and Assist.

With Listen, the app transcribes the user’s speech into text in real time, so they can copy and paste it into other apps or show it to the people they are talking to. With Repeat, the user speaks to the app, which then repeats what they said in a “clear, synthesized voice” to those around them.

“With the listening functionality, which we have demonstrated in the app, we start with the basic speech recognition model which is also used in Gboard. This is an on-device voice recognition model, so it doesn’t require internet access to work,” Cattiau said, adding that Relate builds on much of the technology Google has already developed and personalizes it for each individual.

When training Relate’s voice models, Google tries to cover specific categories of speech to make sure each model is accurate. “There are a number of phrases in the Relate app that are queries for Google Assistant, and the goal behind that is to make the model more robust to queries that are supposed to be said to the Google Assistant. We also have everyday conversation phrases,” she said. Users will also be able to create custom phrases they would like to say to the Assistant, which, according to Google, will make the model more robust and more personal for each user.

The Assist feature lets the user speak directly to Google Assistant from the Relate app to perform different tasks. Google says it also worked with Aubrie Lee, a brand manager at the company whose speech is affected by muscular dystrophy. “Project Relate can be the difference between a confused look and a friendly, grateful laugh,” Lee said in the video.

Asked whether Google plans to expand the app to other languages, Cattiau said the team is starting with English and hopes to add support for other languages later. Japanese is one language the team is actively exploring for the app.
